Welcome aboard, captain!

Titan IC is waiting for you

The fate of the crew

... is now in your hands

How long can you survive?

Many puzzles are waiting for you

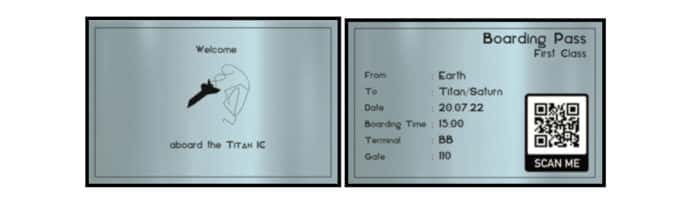

Your journey with Titan IC begins NOW

Earth – Titan / Saturn

Date: 20.7

Boarding from: 3 pm

Terminal: BB

Gate: 110

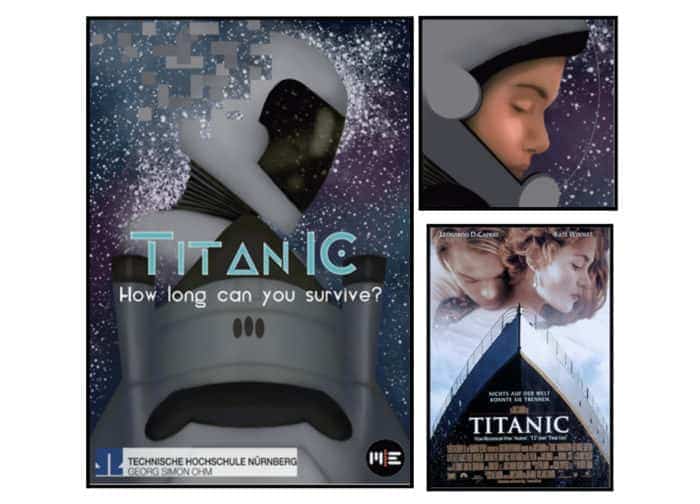

The basic idea behind “Titan IC” was to develop a VR game with escape room elements in Unity. For deeper immersion when interacting with the digital elements, the idea arose to use the hand tracking integrated into the Meta Quest. The goal of the game is to keep an unsolvable situation going for as long as possible.

Additionally, the functionality of Patrick Harms’ Vivian Framework was to be used in the game. Since the framework is intended for designing and linking interaction elements, it lent itself to creating a variety of puzzles based on these elements.

Now you are the captain of your own spaceship

Welcome to Titan IC

Next, the theme “spaceship” was agreed upon, as it offered the possibility to design several control elements while leaving enough room for interpretation to remain creatively flexible. In addition, the scenario of a “collapsing spaceship” justifies an increasing difficulty of the puzzles.

During further brainstorming, the idea took shape of taking a real-time pulse reading that would affect certain elements in the game, and it sparked interest among the team.

The design of Titan IC

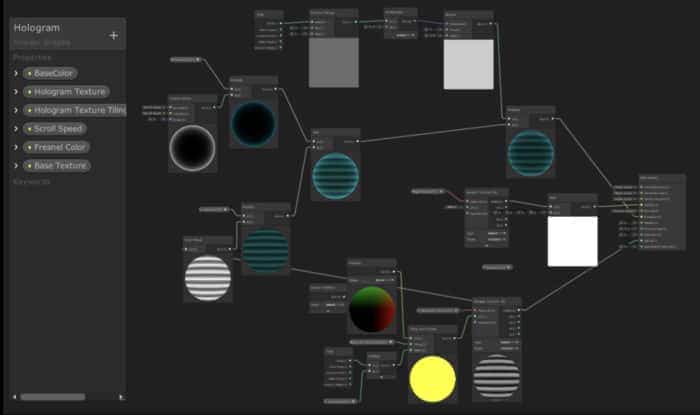

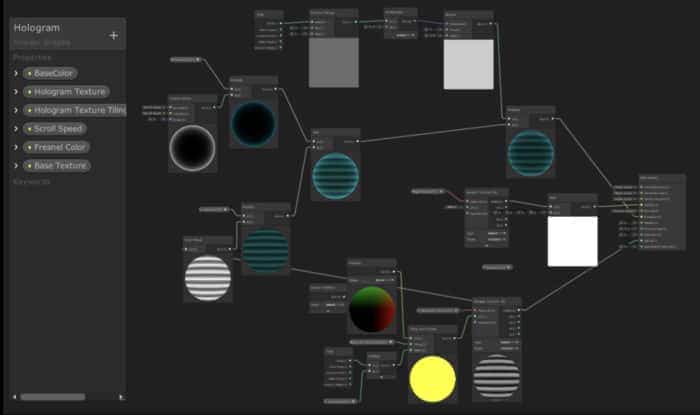

“Titan IC” belongs to the science fiction genre, and the design is based on exactly that. In the current state, this concerns the room, the puzzles, the GUI and the font. The font chosen is Elianto, which differs from ordinary fonts in only a few letters. The future logo will also be created in this font.

For the room and the objects within it, including the puzzles, specific styles such as cyberpunk were discarded. Instead, the design fuses contemporary and futuristic elements, which makes it easier for players to find their way around the scene.

For the second part of the semester project, remaining goals from the first semester were carried over and new goals were defined. These goals included:

- Room customization

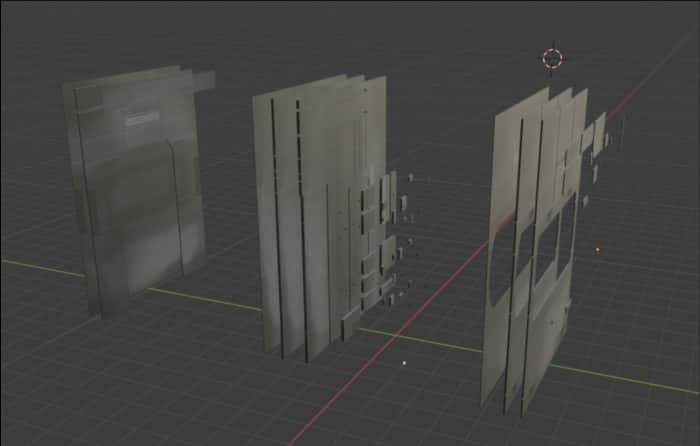

The look of the room was improved, first by reworking the wall texture to give the walls a sense of depth and second by adding particle systems. In addition, objects in the room are interactive: the player can, for example, pick up the chair and throw it around the room.

- Revision of the scene structure

The game has been divided into a start screen, tutorial, intermezzo, main game and game over screen to make it easier to separate the game's functions.

- Interactive puzzles using the Vivian framework

Over the course of the semester, five fully functional virtual prototypes were implemented using the Vivian framework, acting as puzzles in the main scene. The structure of the state machines was kept uniform (states: Default, Error, Success) so that the interface with the Puzzle Manager was easy to implement. When needed, for example when hand tracking caused complications, the puzzles were revised and adjusted. In addition, the puzzles were assigned random tasks and timeout durations in their states to create variety. However, since the Vivian framework is designed for simpler virtual prototypes, implementing some puzzles turned out to be difficult and time-consuming and resulted in very long source code.
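The shared three-state structure with randomized tasks and timeouts can be sketched as a small state machine. This is a minimal Python model, not the Vivian framework's own state machine format; the class name, the task list and the rule that an expired timeout leads to Error are assumptions made for illustration.

```python
import random
from enum import Enum


class PuzzleState(Enum):
    DEFAULT = "Default"
    ERROR = "Error"
    SUCCESS = "Success"


class PuzzleSketch:
    """Hypothetical puzzle with the three shared states.

    Assumption: if the task is not solved before the timeout expires,
    the puzzle switches to ERROR. This rule is illustrative only.
    """

    def __init__(self, tasks, min_timeout, max_timeout, rng=None):
        self.rng = rng or random.Random()
        self.tasks = list(tasks)
        self.min_timeout = min_timeout
        self.max_timeout = max_timeout
        self.reset()

    def reset(self):
        # Enter DEFAULT with a freshly drawn random task and timeout.
        self.state = PuzzleState.DEFAULT
        self.task = self.rng.choice(self.tasks)
        self.timeout = self.rng.uniform(self.min_timeout, self.max_timeout)
        self.elapsed = 0.0

    def tick(self, dt):
        # Advance the timer; only the DEFAULT state can time out.
        if self.state is PuzzleState.DEFAULT:
            self.elapsed += dt
            if self.elapsed >= self.timeout:
                self.state = PuzzleState.ERROR

    def submit(self, answer):
        # In DEFAULT, a correct answer leads to SUCCESS, a wrong one to ERROR.
        if self.state is PuzzleState.DEFAULT:
            if answer == self.task:
                self.state = PuzzleState.SUCCESS
            else:
                self.state = PuzzleState.ERROR
        return self.state
```

Because every puzzle exposes the same three states, a manager only has to understand `DEFAULT`, `ERROR` and `SUCCESS`, regardless of what the individual puzzle looks like.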

- Application runnability

The project should run not only on high-end gaming PCs but also on laptops. Two laptops were prepared for the VR application by updating their USB drivers, graphics drivers and BIOS. These updates alone were very time-consuming: the correct drivers had to be tracked down, and the BIOS updates failed repeatedly. The graphics and render settings of the project were adjusted for a higher frame rate, which required a lot of experimentation. Third-party tools were intended to enable both native porting to the Meta Quest and wireless streaming. The port itself required some basic setup before software could be loaded onto the headset at all. After a few attempts, live streaming via a local router setup worked smoothly and without noticeable latency.

- Optimization of hand tracking

Hand tracking, as a central feature of the project, was to be improved further so that it works with fewer errors. For this purpose, additional colliders were attached to individual wrist points and adjusted in size and shape, which took several test runs in VR. A new hand model was also implemented that shows the gloves of a space suit.
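Why a larger collider makes hand interaction more forgiving can be seen in a simple sphere-overlap test, the basic check a physics engine performs for two sphere colliders. The sketch below is a simplified Python model, not Unity's physics API; the class name, positions and radii are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Sphere:
    """Simplified sphere collider; centre and radius in metres."""
    center: Tuple[float, float, float]
    radius: float


def overlaps(a: Sphere, b: Sphere) -> bool:
    # Two spheres touch when the distance between their centres
    # does not exceed the sum of their radii.
    return math.dist(a.center, b.center) <= a.radius + b.radius


# Hypothetical values: a button and a tracked hand point that ends up
# 2.5 cm away from it, e.g. because of tracking inaccuracy.
button = Sphere((0.0, 0.0, 0.0), 0.01)
small_hand_collider = Sphere((0.025, 0.0, 0.0), 0.01)
large_hand_collider = Sphere((0.025, 0.0, 0.0), 0.02)
```

With these values, `overlaps(button, small_hand_collider)` is `False` while `overlaps(button, large_hand_collider)` is `True`: the larger radius absorbs a small positional error. The trade-off is that colliders which are too large can trigger neighbouring elements unintentionally, which is one reason size and shape need testing in VR.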

- A functional puzzle manager

A month of developing the Puzzle Manager went into a version that acted directly on the prototypes. To optimize the script, we switched to a version that responds to state changes and adapted it over time to changes in the other scripts.
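The switch to a manager that responds to state changes corresponds to the observer pattern: puzzles notify their listeners when their state changes, and the manager only reacts. The Python sketch below is a generic illustration; the class and method names are hypothetical, and in Unity such notifications would more likely be implemented with C# events.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, str, str], None]


class ObservablePuzzle:
    """Hypothetical puzzle that notifies listeners whenever its state changes."""

    def __init__(self, name: str):
        self.name = name
        self.state = "Default"
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def set_state(self, new_state: str) -> None:
        if new_state == self.state:
            return  # no change, so no notification
        old_state, self.state = self.state, new_state
        for listener in self._listeners:
            listener(self.name, old_state, new_state)


class PuzzleManagerSketch:
    """Reacts to state changes instead of driving the puzzles directly."""

    def __init__(self, puzzles: List[ObservablePuzzle]):
        self.counts: Dict[str, int] = {"Error": 0, "Success": 0}
        for puzzle in puzzles:
            puzzle.subscribe(self.on_state_changed)

    def on_state_changed(self, name: str, old_state: str, new_state: str) -> None:
        # Count only the outcomes the manager cares about.
        if new_state in self.counts:
            self.counts[new_state] += 1
```

The puzzles no longer need to know what the manager does with their state, so changes elsewhere in the project only touch the listener, not every prototype.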

- Display relevant data via GUI

The original version of the countdown was already functional. The expiring time was moved to the background and replaced by a percentage display. The calculation of the countdown has been restructured and adapted to the new requirements.
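Converting the remaining time into a percentage for the GUI can be sketched as follows. This assumes a simple linear countdown; the function name is hypothetical, and the project's restructured calculation may take further factors into account.

```python
import math


def remaining_percentage(total_seconds: float, elapsed_seconds: float) -> int:
    """Convert the remaining countdown time into a 0-100 % value for the GUI.

    Assumption: the countdown runs linearly. Rounding up means the display
    only shows 0 % once the time has actually run out.
    """
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    # Clamp the remaining time to the range [0, total_seconds].
    remaining = min(max(0.0, total_seconds - elapsed_seconds), total_seconds)
    return math.ceil(remaining * 100 / total_seconds)
```

For a ten-minute countdown, `remaining_percentage(600, 150)` gives `75`, and the value drops to `0` only when the full 600 seconds have elapsed.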

- Display of game performance in game over screen

Creating the scene itself was quick. The structure of the text fields had to be adapted to the Game Over Manager. Updating the Game Manager variables that the game over screen reads out took additional time, and this work had to be repeated during further development whenever associated scripts were adjusted.

- Development of a pulse measurement app

At the time of the presentation, a functional smartwatch app was available that could transmit the players’ heart rate to the PC via Wi-Fi. Comparisons with Android’s built-in heart rate measurement and with an Apple Watch showed that the measured values were accurate.
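The text does not say how the comparison with the reference devices was quantified. One simple option is the mean absolute difference between paired readings taken at the same moments; the Python sketch below shows that metric under this assumption, with a hypothetical function name.

```python
from statistics import mean
from typing import Sequence


def mean_absolute_difference(app_bpm: Sequence[float],
                             reference_bpm: Sequence[float]) -> float:
    """Average absolute gap between paired heart-rate readings in beats per minute.

    Assumption: both sequences were sampled at the same moments, so
    app_bpm[i] and reference_bpm[i] describe the same heartbeat interval.
    """
    if len(app_bpm) != len(reference_bpm) or len(app_bpm) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return mean(abs(a - r) for a, r in zip(app_bpm, reference_bpm))
```

A small result, measured over a range of resting and elevated heart rates, would support an accuracy claim more concretely than a single spot check.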

- Establish a network between the app and Unity

The local server was implemented with Riptide Networking by Tom Weiland. Because this library already simplifies local networking and makes it intuitive to use, designing the local server and the communicating script in the main application required little time. However, since it was not possible to establish a connection between client and server, the server was not included in the final version.
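Riptide handles message framing itself, so the following is not its wire format. It only illustrates, under assumed conventions, how small a heart-rate payload can be if it is sent over a plain UDP or TCP socket: a hypothetical 2-byte message ID followed by a 2-byte heart rate, both unsigned and big-endian.

```python
import struct

# Hypothetical message layout: message ID, then heart rate in bpm,
# each an unsigned 16-bit integer in big-endian ("network") byte order.
HEART_RATE_MESSAGE_ID = 1
_FORMAT = ">HH"


def encode_heart_rate(bpm: int) -> bytes:
    if not 0 <= bpm <= 0xFFFF:
        raise ValueError("bpm out of range for an unsigned 16-bit field")
    return struct.pack(_FORMAT, HEART_RATE_MESSAGE_ID, bpm)


def decode_heart_rate(payload: bytes) -> int:
    message_id, bpm = struct.unpack(_FORMAT, payload)
    if message_id != HEART_RATE_MESSAGE_ID:
        raise ValueError(f"unexpected message id {message_id}")
    return bpm
```

A fixed byte order matters because the watch app and the Unity application run on different platforms; both sides must agree on the layout for the value to arrive intact.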

- Sound design (voice lines, in-game sounds)

The first implementation of sound effects and ambient sounds in Unity took a relatively long time because basic research was needed first. It was later replaced by a sound manager written by a team member. The team also looked into 3D audio in VR, which adjusts sound sources depending on the user’s position in the room.
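The core idea of 3D audio, adjusting a source's volume to the listener's position, can be sketched with inverse-distance attenuation: full volume within a minimum distance, then gain proportional to 1/distance, with no further attenuation beyond a maximum distance. This resembles the logarithmic rolloff option of Unity's AudioSource in spirit but is not its exact curve; the function name and default distances are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def distance_gain(listener: Vec3, source: Vec3,
                  min_distance: float = 1.0, max_distance: float = 20.0) -> float:
    """Inverse-distance attenuation for a point sound source (simplified model).

    Full volume up to min_distance, then gain = min_distance / distance;
    beyond max_distance the gain stays at its max_distance value.
    """
    if not 0 < min_distance <= max_distance:
        raise ValueError("require 0 < min_distance <= max_distance")
    d = math.dist(listener, source)
    d = min(max(d, min_distance), max_distance)
    return min_distance / d
```

With the defaults, a source 4 m from the player plays at a gain of 0.25, and one right next to the player at full volume. Real spatial audio additionally applies direction-dependent filtering so the source can be localized, which this sketch leaves out.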

The voice lines were recorded individually according to the underlying script and then edited and rendered. All sounds were loaded into the application in stereo. Ambience was normalized to -6 dB, sound effects to -6 dB or -3 dB depending on their initial volume and presence, and voice lines to -3 dB. Acoustic effects such as reverb, chromatic autotune and voice optimization were added to achieve an artificial, robot-like sound. Each of the roughly 50 voice line files had to be individually adjusted, normalized, cut and edited in Adobe Audition, and then manually integrated into the prototype code.
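Normalizing to a target level means scaling the signal so that its peak sits at that level relative to full scale, where a level of L dB corresponds to a linear amplitude of 10^(L/20): about 0.501 for -6 dB and about 0.708 for -3 dB. The text does not say whether peak or loudness normalization was used in Adobe Audition; the Python sketch below assumes peak normalization on float samples in the range [-1.0, 1.0], and the function names are hypothetical.

```python
from typing import List, Sequence


def db_to_linear(db: float) -> float:
    # Convert a level in dB relative to full scale into a linear amplitude factor.
    return 10 ** (db / 20)


def peak_normalize(samples: Sequence[float], target_db: float) -> List[float]:
    """Scale samples so that the largest absolute value sits at target_db.

    Assumes float samples in [-1.0, 1.0]; silent input is returned unchanged.
    """
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    gain = db_to_linear(target_db) / peak
    return [s * gain for s in samples]
```

Normalizing voice lines 3 dB higher than the ambience, as described above, makes their peaks roughly 1.4 times as loud in amplitude, which helps keep them intelligible over the background sound.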
